Should You Measure the Positive or the Negative?

by Stacey Barr

Many believe that we should measure the positive (what we want) rather than the negative (what we don’t want). Should we always?

Like the terminals on a battery, positive and negative might be opposites. But that doesn’t mean one is better than the other: both are needed for the battery to do its job. In everyday language, however, we use these words as synonyms for good and bad.

This good-and-bad meaning carries over into performance measurement, which makes it feel wrong to measure the negative, to measure what we don’t want. Shouldn’t we measure only what we want?

There are three common arguments people use for why we should avoid measuring the negative. But each one misses the point of what performance measurement is for, and misses the positives of measuring the negative.

Argument 1: Where attention goes, energy flows.

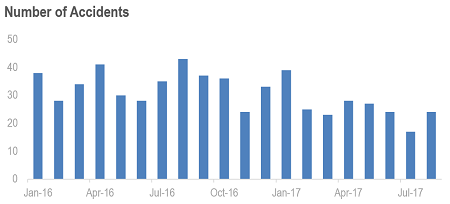

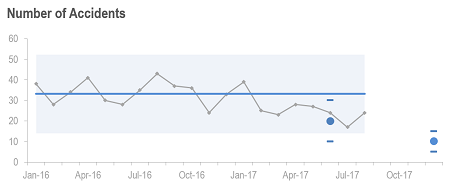

One argument against measuring the negative is that it keeps our attention on what we don’t want. By this logic, if we measure workplace accidents, our attention is on injuring people rather than on keeping them safe.

But really, why can’t our attention be on the shrinking gap between the current injury level and zero, through a trajectory of improvement targets? Our energy, then, could flow in the direction of finding the solutions that continue to shrink that gap.

Energy flows towards the decisions we make. And performance measures exist to help us decide on the best action to get closer to the results we want.

Measures are not self-fulfilling prophecies – we will not get more workplace accidents just because we measure them. We don’t measure just to monitor; we measure to learn how to reach the results we want.

Tip: For every performance measure, positive or negative, rather than judging the measure’s values as good or bad, learn how to send those values in the direction you want.

Argument 2: Bad news is depressing.

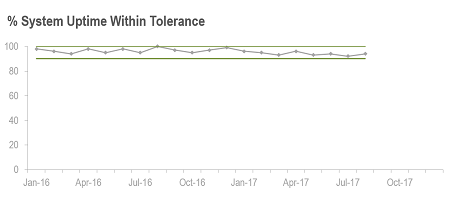

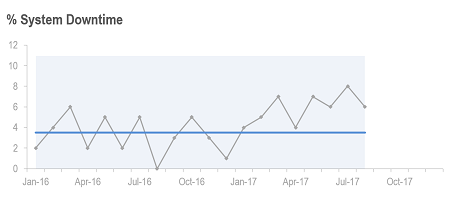

Another argument for measuring the positive rather than the negative is that it’s depressing to monitor what we don’t want. But the role of KPIs is not to manage our mood; it’s to prompt action. And it’s far more depressing to suddenly face a major system crash because we monitored the percentage of system uptime within tolerance and failed to see the small but significant leading indication of an increase in system downtime, which is evident in this XmR chart.
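For readers who want to see the mechanics behind such a chart, here is a minimal Python sketch of how XmR natural process limits are usually calculated, and how a sustained shift can be flagged even when no single point crosses a limit. The downtime figures are made up for illustration, and the function names `xmr_limits` and `find_signals` are hypothetical. The 2.66 scaling constant is the standard one for XmR charts; the choice of just two signal rules, and a run length of 8, are assumptions you may want to adjust.

```python
from statistics import mean


def xmr_limits(baseline):
    """Return (central line, lower limit, upper limit) for an XmR chart.

    Limits are the mean of the baseline +/- 2.66 times the average moving range.
    """
    # Moving ranges: absolute differences between consecutive points.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    centre = mean(baseline)
    avg_mr = mean(moving_ranges)
    return centre, centre - 2.66 * avg_mr, centre + 2.66 * avg_mr


def find_signals(values, centre, lower, upper, run_length=8):
    """Return sorted indices flagged by two common XmR signal rules (an assumed rule set)."""
    signals = set()
    # Rule 1: a single point outside the natural process limits.
    for i, v in enumerate(values):
        if v < lower or v > upper:
            signals.add(i)
    # Rule 2: run_length consecutive points on the same side of the central line.
    for start in range(len(values) - run_length + 1):
        window = values[start:start + run_length]
        if all(v > centre for v in window) or all(v < centre for v in window):
            signals.update(range(start, start + run_length))
    return sorted(signals)


# Hypothetical monthly downtime, as a percentage of each month.
baseline = [0.20, 0.25, 0.18, 0.22, 0.19, 0.24, 0.21, 0.17, 0.23, 0.20, 0.22, 0.19]
recent = [0.23, 0.26, 0.24, 0.27, 0.25, 0.28, 0.26, 0.29]

centre, lower, upper = xmr_limits(baseline)
print(f"central line {centre:.3f}, limits {lower:.3f} to {upper:.3f}")
# → central line 0.208, limits 0.100 to 0.317
print(find_signals(baseline + recent, centre, lower, upper))
# → [12, 13, 14, 15, 16, 17, 18, 19]
```

In this made-up series no single month breaches the upper limit, yet the eight consecutive months above the central line form a signal: the kind of small but significant shift that is easy to overlook.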

Particularly with rare events or small failure rates, signals of change can be swamped and drowned out when we only monitor the positive.
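A quick way to see the scale effect, using made-up numbers: the same shift looks very different depending on which side of it you measure. The helper `relative_change` is hypothetical.

```python
def relative_change(before, after):
    """Percentage change from before to after, relative to before."""
    return (after - before) / before * 100


# Hypothetical figures: downtime doubles from 0.2% to 0.4% of the month.
downtime_before, downtime_after = 0.2, 0.4
uptime_before, uptime_after = 100 - downtime_before, 100 - downtime_after

print(f"downtime: {relative_change(downtime_before, downtime_after):+.1f}%")  # → downtime: +100.0%
print(f"uptime:   {relative_change(uptime_before, uptime_after):+.1f}%")      # → uptime:   -0.2%
```

Both measures move by the same 0.2 percentage points, but relative to its own size, downtime has doubled while uptime has barely moved. On an uptime chart the change is a sliver near 100%; measured directly as downtime, it stands out far more clearly.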

The good news about measures is not what they tell us. The good news about measures is what we do with the information they give us.

Tip: When you want to improve rare events, whether those events are positive or negative, measure those events directly so you can more easily see even subtle changes in their direction.

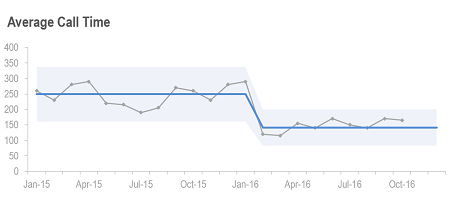

Argument 3: Up should always mean good.

In dashboards and reports, many people like every measure framed so that up means good, which makes each measure faster to interpret. This is typical of organisations that monitor performance by the tick-and-flick method, racing through the measures as quickly as possible. But they miss the insight that comes from the story the measures tell collectively.

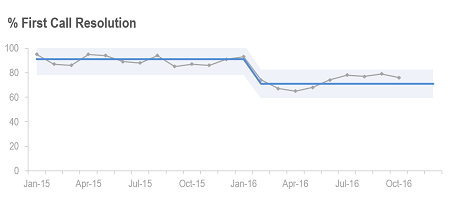

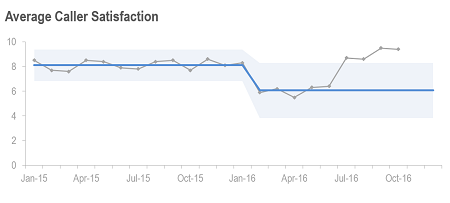

What story do these three call centre measures tell you?

They tell me that whatever the call centre team did to reduce call handling time had an adverse impact on the rate at which customer issues were resolved on the first call. That effect echoes in the caller satisfaction trend. But after a few months, the team seems to have discovered how to resolve more calls within the first call, and more quickly.

Measures work together as characters in a story, and each measure has its own personality. We need to spend time with each measure, appreciate how it interacts with the others, and interpret it only within that context.

Tip: Think of each measure more as a character in a bigger story that involves other related measures, and seek to understand the story that they collectively tell.

It’s best not to set blanket rules about how to measure.

We want our measures to focus us on improving performance, which means they need to give us quick and accurate signals of change and trigger us into action when action is needed. That matters much more than whether we’re measuring the positive or the negative, or which direction is good or bad. Measurement is not simple enough to reduce to a blanket rule.

Is there any debate about measuring the positive or negative in your organisation? What are the arguments for and against? And do you see any insights being missed as a consequence? Explore this topic more in this version by Louise Watson, Regional PuMP Partner for North America, where she also shares a few comebacks to these arguments.

Don’t get distracted by whether to measure the positive or the negative. Keep your focus on learning how to send your measures in the direction you want.

Connect with Stacey


167 Eagle Street, Brisbane Qld 4000, Australia

ACN: 129953635

Director: Stacey Barr